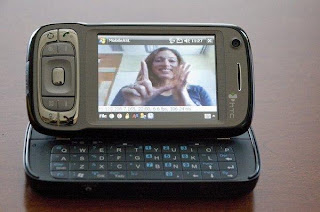

Professors usually don’t ask everyone in a class of 300 students to shout out answers to a question at the same time. But a new application for the iPhone lets a roomful of students beam in answers that can be quietly displayed on a screen, allowing instant group feedback. The application was developed by programmers at Abilene Christian University, which handed out free iPhones and iPod Touch devices to all first-year students this year. The university was the first to do such a large-scale iPhone handout, and officials there have been experimenting with ways to use the gadgets in the classroom.

The application lets professors set up instant polls in various formats. They can ask true-or-false or multiple-choice questions, and they can allow free-form responses. The software can quickly sort and display the answers so that a professor can view responses privately or share them with the class by projecting them on a screen. For open-ended questions, the software can display answers in “cloud” format, showing frequently repeated answers in large fonts and less frequent answers in smaller ones.
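The “cloud” display described above can be illustrated with a short sketch. This is not the university's code; the function name `cloud_sizes`, the point-size range, and the linear scaling are all assumptions chosen for illustration. It tallies free-form answers and gives more frequent answers a larger font size.

```python
from collections import Counter


def cloud_sizes(answers, min_pt=12, max_pt=48):
    """Map each distinct answer to a font size that grows with its frequency.

    Hypothetical helper: the size range and linear scaling are assumptions,
    not details taken from the application described in the article.
    """
    # Normalize so that "Paris" and " paris " count as the same answer,
    # and ignore blank submissions.
    counts = Counter(a.strip().lower() for a in answers if a.strip())
    if not counts:
        return {}

    lo, hi = min(counts.values()), max(counts.values())
    sizes = {}
    for answer, n in counts.items():
        # Linear interpolation between min_pt and max_pt.
        # If every answer is equally common, show them all at max_pt.
        scale = (n - lo) / (hi - lo) if hi > lo else 1.0
        sizes[answer] = round(min_pt + scale * (max_pt - min_pt))
    return sizes


responses = ["Paris", "paris", "Lyon", "Paris ", "Marseille", "lyon"]
# Print the most frequent answers first.
for answer, pt in sorted(cloud_sizes(responses).items(), key=lambda kv: -kv[1]):
    print(f"{answer}: {pt}pt")
```

A real system would probably also need to group near-duplicate answers, such as misspellings, which this sketch does not attempt.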

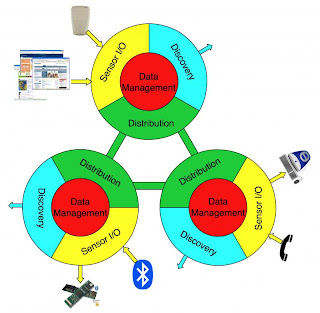

The idea for such a system is far from new. Several companies sell classroom response systems, commonly called “clickers,” which typically involve small wireless gadgets that look like television remote controls. Most clickers allow students to answer true-or-false or multiple-choice questions (but do not allow open-ended feedback), and many colleges have experimented with the devices, especially in large lecture courses.

Many clicker systems have drawbacks, however. Every student in a course must have one of the devices, so in courses that use clickers, students are often required to buy them. Students also have to remember to bring the gadgets to class, which doesn’t always happen. Using cellphones instead of dedicated clicker devices addresses both problems: students who already own a phone need no extra hardware, and because they rely on their phones for all kinds of communication, they usually keep the devices on hand.

The university calls its iPhone software NANOtools. NANO stands for No Advanced Notice, emphasizing how easy the system is for students and professors to use. Some companies that make clickers, such as TurningPoint, are starting to sell similar software to turn smartphones into student feedback systems as well.

More information:

http://chronicle.com/wiredcampus/article/3518/mobile-college-app-turning-iphones-into-super-clickers-for-classroom-feedback
