This month, HCI Lab researchers and

colleagues from iMareCulture have published a peer-review paper at Frontiers in

Robotics and AI entitled "Impact of Dehazing on Underwater Marker

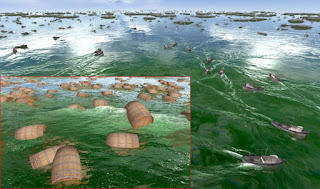

Detection for Augmented Reality". The paper describes the visibility

conditions affecting underwater scenes and shows existing dehazing techniques

that successfully improve the quality of underwater images. Four underwater

dehazing methods are selected for evaluation of their capability of improving

the success of square marker detection in underwater videos. Two reviewed

methods represent approaches of image restoration: Multi-Scale Fusion, and

Bright Channel Prior.

The other two evaluated methods, Automatic Color Enhancement and the Screened Poisson Equation, are image enhancement methods. The evaluation uses a diverse test data set covering different environmental conditions. The results show an increased number of successful marker detections in videos pre-processed by dehazing algorithms, and they compare the performance of each method. The Screened Poisson method performs slightly better than the other methods across the various tested environments, while Bright Channel Prior and Automatic Color Enhancement show similarly positive results.
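To make the evaluation idea concrete, here is a minimal sketch of how detection counts could be compared with and without pre-processing. It is not the code used in the paper: the function names, the choice to count every detected marker in every frame, and the toy detector and dehazing stand-ins are all assumptions made for illustration. In practice, `detect` would wrap a real square marker detector and each entry in `methods` would wrap a real dehazing algorithm.

```python
from typing import Callable, Dict, Iterable, Optional, Sequence


def count_detections(
    frames: Iterable,
    detect: Callable[[object], Sequence],
    preprocess: Optional[Callable[[object], object]] = None,
) -> int:
    """Sum the markers found over all frames, optionally after pre-processing."""
    total = 0
    for frame in frames:
        if preprocess is not None:
            frame = preprocess(frame)
        total += len(detect(frame))
    return total


def compare_methods(
    frames: Iterable,
    detect: Callable[[object], Sequence],
    methods: Dict[str, Callable[[object], object]],
) -> Dict[str, int]:
    """Return detection counts for the raw video ("none") and each method."""
    frames = list(frames)  # allow several passes over the same frames
    results = {"none": count_detections(frames, detect)}
    for name, method in methods.items():
        results[name] = count_detections(frames, detect, method)
    return results


# Hypothetical stand-ins: a "frame" is just a contrast score from 0 to 100.
def toy_detect(frame: int) -> list:
    """Pretend a single marker is found whenever contrast reaches 50."""
    return ["marker"] if frame >= 50 else []


def toy_dehaze(frame: int) -> int:
    """Pretend dehazing raises contrast by 20 points, capped at 100."""
    return min(100, frame + 20)


if __name__ == "__main__":
    print(compare_methods([20, 40, 60, 80], toy_detect, {"toy_dehaze": toy_dehaze}))
```

Keeping the detector and the dehazing methods as plain callables means the same loop can be reused for any of the four methods and for any test video.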

More information: