Carnegie Mellon University researchers have found ways to track body movements and detect shape changes using arrays of RFID tags. RFID-embedded clothing could thus be used to control avatars in video games, or to tell you when you should sit up straight. The researchers devised methods for tracking the tags, and thus for monitoring movements and shapes. RFID tags reflect, or backscatter, certain radio frequencies. It would be possible to use multiple antennas to track this backscatter and triangulate the locations of the tags.
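The article does not say how a multi-antenna system would turn backscatter into tag locations. As one illustration, here is a minimal sketch of range-based trilateration, a close cousin of angle-based triangulation, assuming three fixed antennas at known 2-D positions have each already estimated their distance to a tag. How those distances are measured is left out, and the function name `trilaterate` is hypothetical.

```python
import math

Point = tuple[float, float]


def trilaterate(antennas: list[Point], ranges: list[float]) -> Point:
    """Estimate a tag's 2-D position from three antennas at known positions
    and the (assumed already measured) distance from each antenna to the tag.

    Each range defines a circle (x - xi)^2 + (y - yi)^2 = ri^2. Subtracting
    the first circle's equation from the other two cancels the x^2 and y^2
    terms, leaving two linear equations, which we solve with Cramer's rule.
    """
    (x0, y0), (x1, y1), (x2, y2) = antennas
    r0, r1, r2 = ranges
    # Linear system: a*x + b*y = c and d*x + e*y = f
    a, b = 2 * (x1 - x0), 2 * (y1 - y0)
    c = r0**2 - r1**2 + x1**2 - x0**2 + y1**2 - y0**2
    d, e = 2 * (x2 - x0), 2 * (y2 - y0)
    f = r0**2 - r2**2 + x2**2 - x0**2 + y2**2 - y0**2
    det = a * e - b * d
    if abs(det) < 1e-12:
        # Collinear antennas cannot distinguish mirror-image positions.
        raise ValueError("antennas are collinear; position is ambiguous")
    return ((c * e - b * f) / det, (a * f - c * d) / det)


# Usage: a tag at (1, 1) seen by antennas at (0, 0), (4, 0) and (0, 3).
x, y = trilaterate(
    [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)],
    [math.sqrt(2), math.sqrt(10), math.sqrt(5)],
)
print(round(x, 6), round(y, 6))  # → 1.0 1.0
```

With perfect ranges, three antennas suffice in 2-D; a real system would likely use more antennas and a least-squares fit to absorb noise. This multi-antenna setup is what the CMU work sidesteps by using a single, mobile antenna.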

Rather, the CMU researchers

showed they could use a single, mobile antenna to monitor an array of tags

without any prior calibration. Just how this works varies based on whether the

tags are being used to track the body's skeletal positions or to track changes

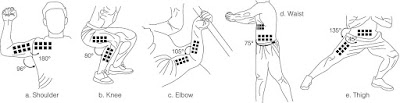

in shape. For body movement tracking, arrays of RFID tags are positioned on

either side of the knee, elbow or other joints. By keeping track of the

ever-so-slight differences in when the backscattered radio signals from each

tag reach the antenna, it's possible to calculate the angle of bend in a joint.
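The article does not spell out the math behind the joint-angle calculation. The following is a minimal sketch under simplifying assumptions of our own, not the researchers' published method. It assumes the tiny arrival-time differences show up as carrier phase differences between neighboring tags in a straight array, with phase growing with path length. It also assumes the antenna is far away (far field) and lies in the plane in which the joint bends, that tag spacing is at most a quarter wavelength (about 8 cm at 915 MHz) so phase steps do not wrap, and that both arrays' angles fall on the same side of the antenna direction. Under those assumptions, each tag array behaves like a small antenna array in reverse: the phase step between adjacent tags reveals the array's angle to the antenna, and the difference between the two arrays' angles is the bend. The function names, the 915 MHz carrier and the 5 cm spacing are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def array_angle(phase_step_rad: float, spacing_m: float, freq_hz: float) -> float:
    """Angle (radians, 0..pi) between a straight tag array's axis and the
    direction from the array to the antenna.

    Hypothetical far-field model: each step of spacing_m along the array
    shortens the one-way path to the antenna by spacing_m * cos(theta).
    Backscatter travels out and back, so, assuming phase grows with path
    length, the round-trip phase step is
    4 * pi * spacing_m * cos(theta) / wavelength. phase_step_rad is the
    phase of one tag minus the phase of the next tag along the axis,
    assumed already unwrapped into [-pi, pi].
    """
    wavelength = SPEED_OF_LIGHT / freq_hz
    cos_theta = phase_step_rad * wavelength / (4 * math.pi * spacing_m)
    # Measurement noise can push the estimate slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.acos(cos_theta)


def joint_bend_angle(phase_step_upper: float, phase_step_lower: float,
                     spacing_m: float, freq_hz: float) -> float:
    """Bend angle (radians) between two tag arrays on either side of a joint.

    Assumes both arrays are ordered in the same direction along the limb,
    so a straight limb gives equal angles and a bend of zero, and that
    both angles fall on the same side of the antenna direction.
    """
    theta_upper = array_angle(phase_step_upper, spacing_m, freq_hz)
    theta_lower = array_angle(phase_step_lower, spacing_m, freq_hz)
    return abs(theta_upper - theta_lower)


# Usage: synthetic phase steps for arrays at 90 and 60 degrees to the antenna.
wavelength = SPEED_OF_LIGHT / 915e6


def synthetic_step(theta_deg: float) -> float:
    return 4 * math.pi * 0.05 * math.cos(math.radians(theta_deg)) / wavelength


bend = joint_bend_angle(synthetic_step(90), synthetic_step(60), 0.05, 915e6)
print(round(math.degrees(bend), 6))  # → 30.0
```

Working in phase rather than raw time is one plausible design choice here: at 915 MHz a 5 cm tag spacing changes the round-trip path by at most 10 cm, or about a third of a nanosecond, which is far easier to observe as a fraction of a carrier cycle.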

More information: