Neuralink tries to build an

interface that enables someone's brain to control a smartphone or computer, and

to make this process as safe and routine as Lasik surgery. Currently, Neuralink

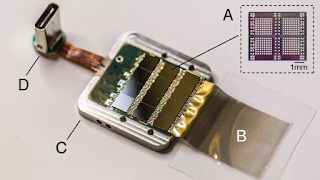

has only experimented on animals. In these experiments, the company used a

surgical robot to embed into a rat brain a tiny probe with about 3,100

electrodes on some 100 flexible wires or threads — each of which is

significantly smaller than a human hair.

The device can record the activity of neurons, which could help scientists learn more about how the brain functions, particularly in the study of disease and degenerative disorders.

The device has also been tested on at least one monkey, which was able to control a computer with its brain. Neuralink's experiments involve embedding the probe in an animal's brain through invasive surgery, using a sewing-machine-like robot that drills holes into the skull.

More information: